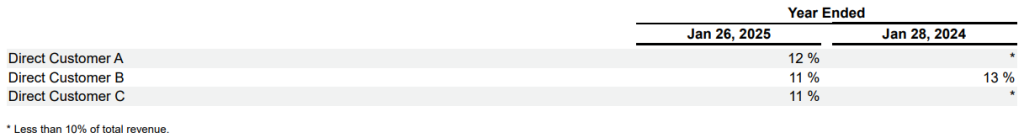

The fortunes of Nvidia (NASDAQ:NVDA) are closely tied to Big Tech hyperscalers. Although the AI/GPU designer didn’t name its largest clients in its latest 10-K filing on Wednesday, Nvidia’s “Direct Customers” A, B, and C together accounted for 34% of the company’s total revenue for fiscal 2025.

Given that these sales come from Nvidia’s Compute & Networking segment, it is reasonable to assume these clients are Big Tech firms such as Microsoft (NASDAQ:MSFT), Alphabet (NASDAQ:GOOGL), Amazon (NASDAQ:AMZN), Meta Platforms (NASDAQ:META), and possibly even Tesla (NASDAQ:TSLA) for its Grok 3 integration.

In turn, this dynamic between Big Tech and Nvidia is critical to the company’s valuation. When the more cost-effective DeepSeek AI model came into the public spotlight at the end of January, Nvidia lost nearly $600 billion in market value in a single day, the largest one-day loss for any company in US stock market history.

After all, if AI models require less compute, Big Tech hyperscalers need fewer data centers, which lowers demand for Nvidia’s AI chips. In other words, NVDA shareholders should account for the concentration risk in Nvidia’s client profile. But how big a risk is it?

Is Microsoft Indicative of Data Center Slowdown Risk?

This week, rumors surfaced that Microsoft is scaling back its data center infrastructure. Namely, analysts at brokerage TD Cowen speculated, based on supply chain channel checks, that Microsoft had cancelled data center leases worth “a couple of hundred megawatts” of capacity.

Such a rumor perfectly complemented the DeepSeek fallout. However, Microsoft promptly dismissed this speculation as unfounded.

“Last year alone, we added more capacity than any prior year in history. While we may strategically pace or adjust our infrastructure in some areas, we will continue to grow strongly in all regions.”

Microsoft spokesperson to CNBC

Microsoft denied that there was “any change to their DC [data center] strategy”. In fiscal 2025, the company expects to spend ~$80 billion on data center infrastructure, most of it in the US.

For those who paid attention to the latest ASML (AS:ASML) earnings, Microsoft’s rebuke of these rumors is not surprising. ASML CEO Christophe Fouquet believes that DeepSeek’s compute cost optimizations will only serve to boost AI market penetration:

“A lower cost of AI could mean more applications. More applications means more demand over time. We see that as an opportunity for more chips demand.”

It is anyone’s guess how big a client Microsoft is to Nvidia, but the three “Direct Customers” split their share of annual revenue fairly evenly: 12% for A and 11% each for B and C, or 34% combined.

In the third quarter, which ended in October, Direct Customers A, B, and C each accounted for exactly 12% of quarterly revenue ($35.1 billion), or 36% combined. Considering Microsoft’s $14 billion investment in OpenAI by the end of 2024, on top of its Azure cloud compute infrastructure, Microsoft is likely “Direct Customer B”, which held a prominent 13% share last year.
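The percentages above translate into rough dollar figures with simple arithmetic. The sketch below is a minimal illustration, assuming the 12%/11%/11% full-year split applies to the $130.5 billion fiscal-year revenue and the 12%-each Q3 split applies to roughly $35.1 billion of quarterly revenue; the helper name `dollar_exposure` is hypothetical.

```python
# Sketch: convert the customer-concentration percentages quoted in the
# article into approximate dollar amounts. Names are illustrative only.

FY_REVENUE_BN = 130.5   # full fiscal-year revenue quoted in the article ($B)
Q3_REVENUE_BN = 35.1    # Q3 (quarter ending October) revenue quoted ($B)

# Share of revenue (percent) attributed to each unnamed "Direct Customer"
annual_shares = {"A": 12, "B": 11, "C": 11}
q3_shares = {"A": 12, "B": 12, "C": 12}


def dollar_exposure(revenue_bn: float, share_pct: float) -> float:
    """Convert a percentage share of revenue into billions of dollars."""
    return revenue_bn * share_pct / 100


annual_total_pct = sum(annual_shares.values())  # combined full-year share
q3_total_pct = sum(q3_shares.values())          # combined Q3 share

for name, pct in annual_shares.items():
    amount = dollar_exposure(FY_REVENUE_BN, pct)
    print(f"Customer {name}: {pct}% of FY revenue, ~${amount:.1f}B")

annual_amount = dollar_exposure(FY_REVENUE_BN, annual_total_pct)
q3_amount = dollar_exposure(Q3_REVENUE_BN, q3_total_pct)
print(f"Full year combined: {annual_total_pct}%, ~${annual_amount:.1f}B")
print(f"Q3 combined: {q3_total_pct}%, ~${q3_amount:.1f}B")
```

On these inputs, the three customers together come to roughly $44 billion over the full year and roughly $12.6 billion in Q3 alone, which is why the combined share differs between the annual (34%) and quarterly (36%) views.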

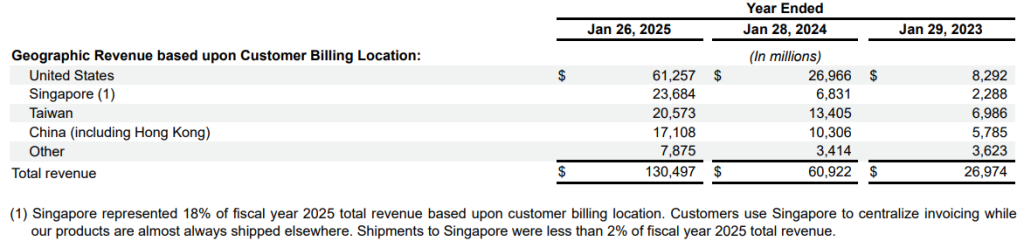

By region, setting aside Singapore, which serves mainly as an invoicing and distribution hub, the US accounts for the largest share of Nvidia’s sales at 47%, followed by Taiwan at 16% and China at 13%.

From fiscal 2023 to 2025, Nvidia’s sales to clients outside the US dropped from 69% to 53% of total revenue, suggesting that Nvidia will have to keep working around US-imposed export controls.
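The 53% figure follows directly from the 47% US share: whatever is not billed to US customers counts as outside-US revenue. The sketch below makes that explicit using only the percentages quoted above. Lumping Singapore and every unnamed region into one “other” bucket is a simplifying assumption made here, not Nvidia’s own breakdown.

```python
# Sketch: regional revenue shares (percent of total) as quoted in the article.
# The "other" bucket (Singapore plus all unnamed regions) is an assumption.

fy2025_shares = {"US": 47, "Taiwan": 16, "China": 13}
OUTSIDE_US_FY2023 = 69  # percent, as quoted for fiscal 2023


def outside_us_share(us_pct: float) -> float:
    """Share of revenue billed to customers outside the US."""
    return 100 - us_pct


outside_us_fy2025 = outside_us_share(fy2025_shares["US"])
other_regions = 100 - sum(fy2025_shares.values())  # Singapore and the rest

# Change in the outside-US share, in percentage points and in relative terms
pp_change = outside_us_fy2025 - OUTSIDE_US_FY2023
relative_change_pct = pp_change / OUTSIDE_US_FY2023 * 100

print(f"Outside-US share, fiscal 2025: {outside_us_fy2025}%")
print(f"Singapore and all other regions: {other_regions}%")
print(f"Change since fiscal 2023: {pp_change} pp ({relative_change_pct:.1f}% relative)")
```

The distinction matters: a 16-percentage-point drop is a roughly 23% relative decline in the outside-US share.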

Nvidia’s Next Wave of AI Scaling

The AI boom was built largely on Nvidia’s H100 chips, which secured the company’s ~90% dominance of the AI training and infrastructure market. Even DeepSeek trained its models on H800s, a somewhat nerfed version of the H100 with similar compute power, which Nvidia designed to work around the first wave of US export controls in October 2022.

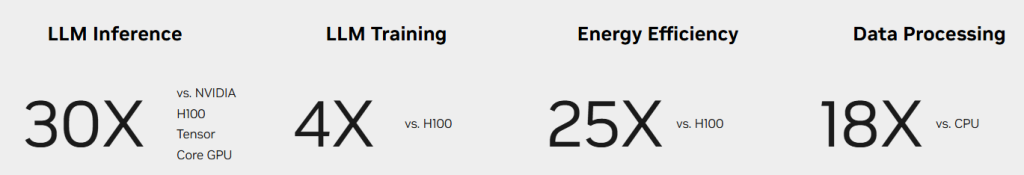

Now that the AI market is more mature and diversified, and AI models produce more robust outputs, hyperscalers will count on Nvidia’s Blackwell architecture. One premium example is the GB200 NVL72 system, a rack-scale cluster of 36 CPUs and 72 GPUs that offers drastically greater performance than an equivalent number of H100s.

Before the DeepSeek reveal, Nvidia CEO Jensen Huang described Blackwell demand as “insane” in October. On the latest earnings call, Huang reiterated this bullish line, calling Blackwell demand “extraordinary”.

For the full fiscal year 2025, Nvidia’s revenue jumped 114% to $130.5 billion. For the fourth quarter, Nvidia reported $39.33 billion in revenue, topping the LSEG estimate of $38.05 billion, and earnings per share (EPS) of $0.89 vs $0.84 expected, boosting shareholder confidence.
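To put these numbers in proportion, the sketch below backs out the prior-year revenue implied by 114% growth and expresses each beat as a percentage surprise over the estimate. This is a minimal sketch using only the figures quoted above; because the growth rate is rounded, the implied prior-year figure is approximate, and the helper names are hypothetical.

```python
# Sketch: quick arithmetic on the results quoted in the article.
# Figures are in billions of dollars except EPS (dollars per share).


def implied_prior(current: float, growth_pct: float) -> float:
    """Back out the prior-period value implied by a reported growth rate."""
    return current / (1 + growth_pct / 100)


def surprise_pct(actual: float, estimate: float) -> float:
    """Percentage by which a reported figure beat (or missed) the estimate."""
    return (actual - estimate) / estimate * 100


prior_year_revenue = implied_prior(130.5, 114)   # implied prior-year revenue
revenue_beat = surprise_pct(39.33, 38.05)        # quarterly revenue vs LSEG
eps_beat = surprise_pct(0.89, 0.84)              # EPS vs expectations

print(f"Implied prior-year revenue: ~${prior_year_revenue:.1f}B")
print(f"Quarterly revenue surprise: {revenue_beat:+.1f}%")
print(f"EPS surprise: {eps_beat:+.1f}%")
```

On these inputs, 114% growth implies prior-year revenue of roughly $61 billion, while the quarterly revenue beat is about 3.4% and the EPS beat about 6%.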

Citing Blackwell demand, Nvidia now expects ~$43 billion in Q1 revenue, plus or minus 2%, above the LSEG estimate of $41.78 billion. And like ASML’s CEO, Huang considers DeepSeek’s development a boost to the entire AI sector.
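A guidance band is simply the midpoint plus or minus a percentage. The sketch below assumes Q1 guidance of roughly $43 billion with a symmetric 2% band (an assumption about how the range is framed) and compares it with the $41.78 billion LSEG estimate. The constant and function names are hypothetical.

```python
# Sketch: guidance band from a midpoint and a +/- tolerance, compared with
# the consensus estimate quoted in the article. Figures in billions of dollars.
from typing import Tuple

GUIDANCE_MID_BN = 43.0    # Q1 guidance midpoint, read as ~$43 billion
TOLERANCE_PCT = 2.0       # assumed symmetric +/- 2% band
LSEG_ESTIMATE_BN = 41.78  # consensus estimate quoted in the article


def guidance_band(midpoint: float, tolerance_pct: float) -> Tuple[float, float]:
    """Return (low, high) for a midpoint +/- a percentage tolerance."""
    delta = midpoint * tolerance_pct / 100
    return midpoint - delta, midpoint + delta


low, high = guidance_band(GUIDANCE_MID_BN, TOLERANCE_PCT)
mid_vs_estimate_pct = (GUIDANCE_MID_BN - LSEG_ESTIMATE_BN) / LSEG_ESTIMATE_BN * 100

print(f"Guidance range: ${low:.2f}B to ${high:.2f}B")
print(f"Midpoint vs LSEG estimate: {mid_vs_estimate_pct:+.1f}%")
print("Low end above estimate:", low > LSEG_ESTIMATE_BN)
```

Under these assumptions the range runs from about $42.14 billion to $43.86 billion, so even the low end sits above the LSEG estimate, and the midpoint is roughly 2.9% higher.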

“Future reasoning models can consume much more compute. DeepSeek-R1 has ignited global enthusiasm. It’s an excellent innovation. But even more importantly, it has open-sourced a world-class reasoning AI model.”

Nvidia CEO Jensen Huang in February’s earnings call

Investors should also consider that DeepSeek might actually increase compute power demand by inviting more experimentation with different models that adopt some of its optimized workflows.

Moreover, even if DeepSeek lowers overall compute demand through efficiency improvements, text-to-video generation alone is likely to push the demand envelope. According to Verified Market Research, the text-to-video AI market is projected to grow at a compound annual growth rate (CAGR) of 36% between 2024 and 2031.
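A CAGR compounds, so its cumulative effect is larger than the headline rate suggests. The sketch below shows what 36% a year implies over the forecast window, assuming 2024 is the base year (seven compounding periods to 2031); the function names are hypothetical, and the inverse function is included only as a round-trip check.

```python
# Sketch: what a 36% compound annual growth rate implies over 2024-2031.
# Treating 2024 as the base year (7 compounding periods) is an assumption.


def growth_multiple(cagr_pct: float, years: int) -> float:
    """Total growth factor after compounding cagr_pct for `years` periods."""
    return (1 + cagr_pct / 100) ** years


def cagr_from_endpoints(start: float, end: float, years: int) -> float:
    """Inverse: the CAGR (percent) that turns `start` into `end`."""
    return ((end / start) ** (1 / years) - 1) * 100


YEARS = 2031 - 2024
multiple = growth_multiple(36, YEARS)
recovered_cagr = cagr_from_endpoints(1.0, multiple, YEARS)  # round-trip check

print(f"{YEARS} years at 36% CAGR -> market roughly {multiple:.1f}x its 2024 size")
print(f"Recovered CAGR: {recovered_cagr:.1f}%")
```

At that rate, the market would be roughly 8.6 times its 2024 size by 2031.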

The Bottom Line

At first glance, the demand for AI data centers might appear inflated compared to the tangible gains achieved so far, especially given the hard problem of AI confabulation. However, it would be shortsighted to overlook the transformative potential of AI as a revolutionary technology.

The current push to build AI-powered systems is not a fleeting trend but a deliberate, strategic effort. AI’s applications are virtually limitless, ranging from mundane content generation to the defense and intelligence technology developed by Palantir (NASDAQ:PLTR).

In this context, Nvidia stands out as a first mover with an unmatched competitive advantage in this rapidly expanding sector. Since the initial wave of AI hype three years ago, Nvidia has consistently stayed ahead of the curve, with its Blackwell architecture now positioned as the next major upgrade cycle for the industry.

In turn, the Blackwell platform is poised to become the foundation for the next wave of AI applications. Big Tech hyperscalers will drive this adoption through their cloud computing services, and Nvidia’s market leadership positions it to remain the primary beneficiary of the resulting economies of scale.

***

Neither the author, Tim Fries, nor this website, The Tokenist, provide financial advice. Please consult our website policy prior to making financial decisions.